Kubernetes node scheduling: cordon, uncordon, drain

2022-09-16

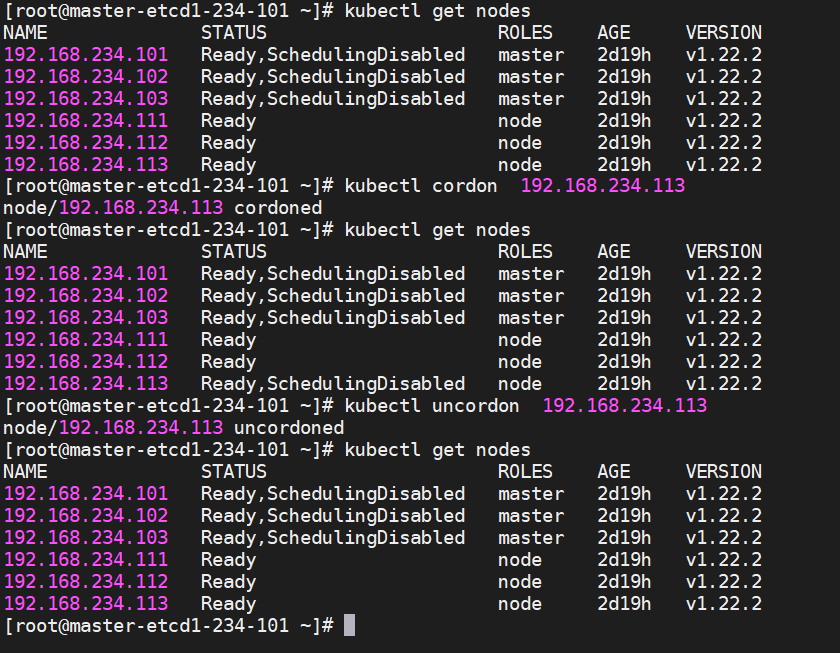

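1. Using cordon

After this command runs, the cordoned node no longer takes part in scheduling new pods; pods already running on it keep running. To make the node schedulable again, lift the restriction with uncordon.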

```
[root@master-etcd1-234-101 ~]# kubectl get nodes
NAME              STATUS                     ROLES    AGE     VERSION
192.168.234.101   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.102   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.103   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.111   Ready                      node     2d19h   v1.22.2
192.168.234.112   Ready                      node     2d19h   v1.22.2
192.168.234.113   Ready                      node     2d19h   v1.22.2
[root@master-etcd1-234-101 ~]# kubectl cordon 192.168.234.113
node/192.168.234.113 cordoned
[root@master-etcd1-234-101 ~]# kubectl get nodes
NAME              STATUS                     ROLES    AGE     VERSION
192.168.234.101   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.102   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.103   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.111   Ready                      node     2d19h   v1.22.2
192.168.234.112   Ready                      node     2d19h   v1.22.2
192.168.234.113   Ready,SchedulingDisabled   node     2d19h   v1.22.2
[root@master-etcd1-234-101 ~]# kubectl uncordon 192.168.234.113
node/192.168.234.113 uncordoned
[root@master-etcd1-234-101 ~]# kubectl get nodes
NAME              STATUS                     ROLES    AGE     VERSION
192.168.234.101   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.102   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.103   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.111   Ready                      node     2d19h   v1.22.2
192.168.234.112   Ready                      node     2d19h   v1.22.2
192.168.234.113   Ready                      node     2d19h   v1.22.2
[root@master-etcd1-234-101 ~]#
```
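A cordoned node is recognizable by "SchedulingDisabled" in the STATUS column. The snippet below is a minimal sketch, assuming a POSIX shell with awk; cordoned_nodes is a hypothetical helper name, not a kubectl feature. In this cluster the three masters would be listed too, because they already show SchedulingDisabled.

```shell
#!/bin/sh
# Hypothetical helper: print the names of cordoned nodes, reading
# `kubectl get nodes` output on stdin. STATUS is the 2nd field; a cordoned
# node has "SchedulingDisabled" in it (e.g. Ready,SchedulingDisabled).
cordoned_nodes() {
  awk 'NR > 1 && $2 ~ /SchedulingDisabled/ { print $1 }'
}

# Usage against a live cluster:
#   kubectl get nodes | cordoned_nodes
```

To check a single node without parsing the table, `kubectl get node 192.168.234.113 -o jsonpath='{.spec.unschedulable}'` reads the field that cordon sets; it prints `true` while the node is cordoned.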

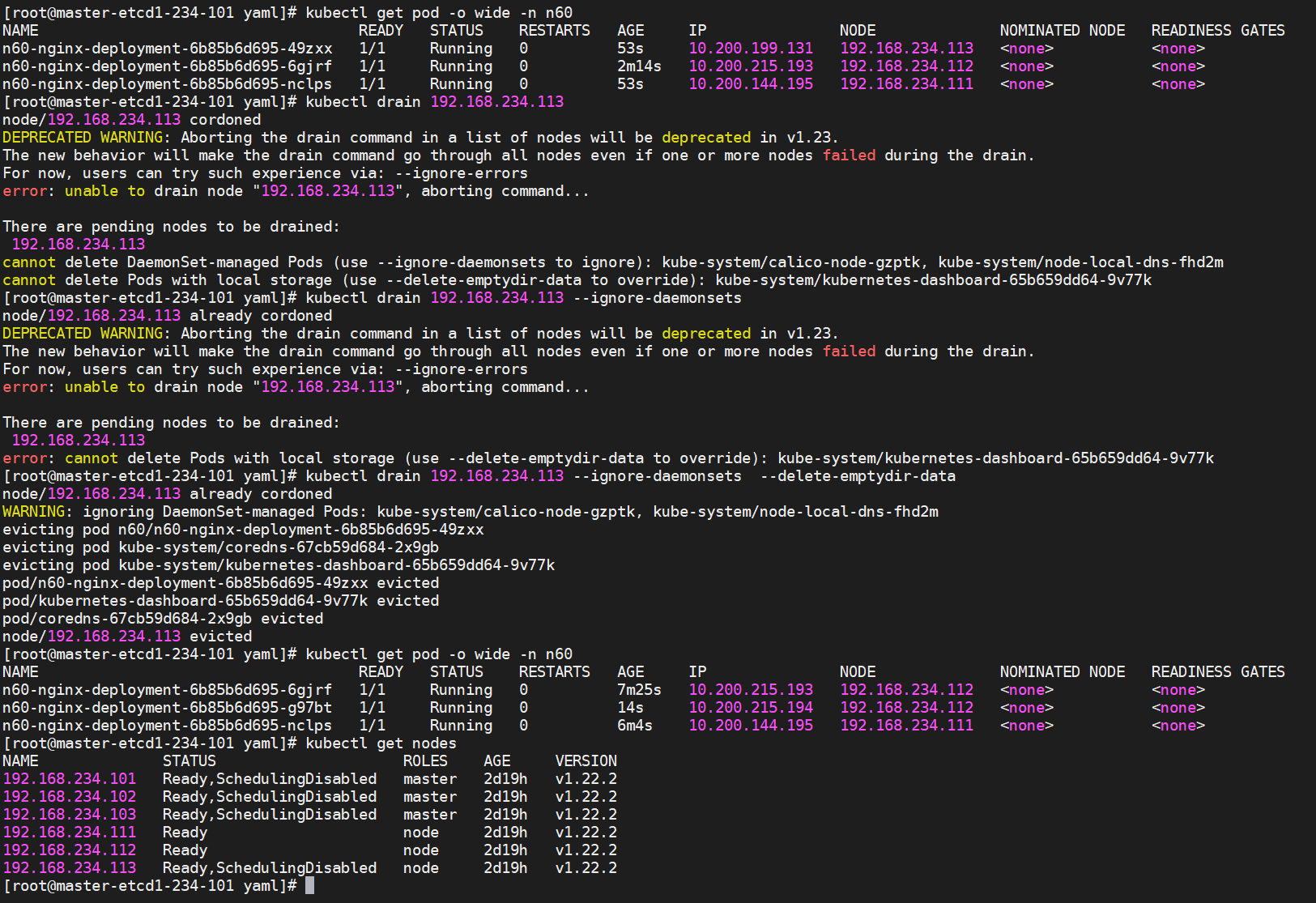

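2. Using the drain (eviction) command

drain first cordons the node and then evicts the pods running on it. By default it refuses to continue when the node runs DaemonSet-managed pods or pods that use emptyDir local storage; the error messages name the flags that override each check.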

```
[root@master-etcd1-234-101 yaml]# kubectl get pod -o wide -n n60
NAME                                    READY   STATUS    RESTARTS   AGE     IP               NODE              NOMINATED NODE   READINESS GATES
n60-nginx-deployment-6b85b6d695-49zxx   1/1     Running   0          53s     10.200.199.131   192.168.234.113   <none>           <none>
n60-nginx-deployment-6b85b6d695-6gjrf   1/1     Running   0          2m14s   10.200.215.193   192.168.234.112   <none>           <none>
n60-nginx-deployment-6b85b6d695-nclps   1/1     Running   0          53s     10.200.144.195   192.168.234.111   <none>           <none>
[root@master-etcd1-234-101 yaml]# kubectl drain 192.168.234.113
node/192.168.234.113 cordoned
DEPRECATED WARNING: Aborting the drain command in a list of nodes will be deprecated in v1.23.
The new behavior will make the drain command go through all nodes even if one or more nodes failed during the drain.
For now, users can try such experience via: --ignore-errors
error: unable to drain node "192.168.234.113", aborting command...
There are pending nodes to be drained:
 192.168.234.113
cannot delete DaemonSet-managed Pods (use --ignore-daemonsets to ignore): kube-system/calico-node-gzptk, kube-system/node-local-dns-fhd2m
cannot delete Pods with local storage (use --delete-emptydir-data to override): kube-system/kubernetes-dashboard-65b659dd64-9v77k
[root@master-etcd1-234-101 yaml]# kubectl drain 192.168.234.113 --ignore-daemonsets
node/192.168.234.113 already cordoned
DEPRECATED WARNING: Aborting the drain command in a list of nodes will be deprecated in v1.23.
The new behavior will make the drain command go through all nodes even if one or more nodes failed during the drain.
For now, users can try such experience via: --ignore-errors
error: unable to drain node "192.168.234.113", aborting command...
There are pending nodes to be drained:
 192.168.234.113
error: cannot delete Pods with local storage (use --delete-emptydir-data to override): kube-system/kubernetes-dashboard-65b659dd64-9v77k
[root@master-etcd1-234-101 yaml]# kubectl drain 192.168.234.113 --ignore-daemonsets --delete-emptydir-data
node/192.168.234.113 already cordoned
WARNING: ignoring DaemonSet-managed Pods: kube-system/calico-node-gzptk, kube-system/node-local-dns-fhd2m
evicting pod n60/n60-nginx-deployment-6b85b6d695-49zxx
evicting pod kube-system/coredns-67cb59d684-2x9gb
evicting pod kube-system/kubernetes-dashboard-65b659dd64-9v77k
pod/n60-nginx-deployment-6b85b6d695-49zxx evicted
pod/kubernetes-dashboard-65b659dd64-9v77k evicted
pod/coredns-67cb59d684-2x9gb evicted
node/192.168.234.113 evicted
[root@master-etcd1-234-101 yaml]# kubectl get pod -o wide -n n60
NAME                                    READY   STATUS    RESTARTS   AGE     IP               NODE              NOMINATED NODE   READINESS GATES
n60-nginx-deployment-6b85b6d695-6gjrf   1/1     Running   0          7m25s   10.200.215.193   192.168.234.112   <none>           <none>
n60-nginx-deployment-6b85b6d695-g97bt   1/1     Running   0          14s     10.200.215.194   192.168.234.112   <none>           <none>
n60-nginx-deployment-6b85b6d695-nclps   1/1     Running   0          6m4s    10.200.144.195   192.168.234.111   <none>           <none>
[root@master-etcd1-234-101 yaml]# kubectl get nodes
NAME              STATUS                     ROLES    AGE     VERSION
192.168.234.101   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.102   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.103   Ready,SchedulingDisabled   master   2d19h   v1.22.2
192.168.234.111   Ready                      node     2d19h   v1.22.2
192.168.234.112   Ready                      node     2d19h   v1.22.2
192.168.234.113   Ready,SchedulingDisabled   node     2d19h   v1.22.2
```
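The three drain attempts above come down to one command line with both flags. A minimal sketch, assuming a POSIX shell with kubectl on the PATH; drain_node and restore_node are hypothetical helper names, not kubectl features.

```shell
#!/bin/sh
# Hypothetical helper: take a node out of service as in this walkthrough.
# drain cordons the node, then evicts its pods. The flags are the ones the
# drain errors asked for:
#   --ignore-daemonsets     leave DaemonSet-managed pods (calico-node, node-local-dns) in place
#   --delete-emptydir-data  allow evicting pods that use emptyDir; that data is lost
drain_node() {
  node="$1"
  if [ -z "$node" ]; then
    echo "usage: drain_node <node-name>" >&2
    return 2
  fi
  kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data
}

# Hypothetical helper: make the node schedulable again after maintenance.
restore_node() {
  node="$1"
  if [ -z "$node" ]; then
    echo "usage: restore_node <node-name>" >&2
    return 2
  fi
  kubectl uncordon "$node"
}

# Usage:
#   drain_node 192.168.234.113
#   ... maintenance on the node ...
#   restore_node 192.168.234.113
```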

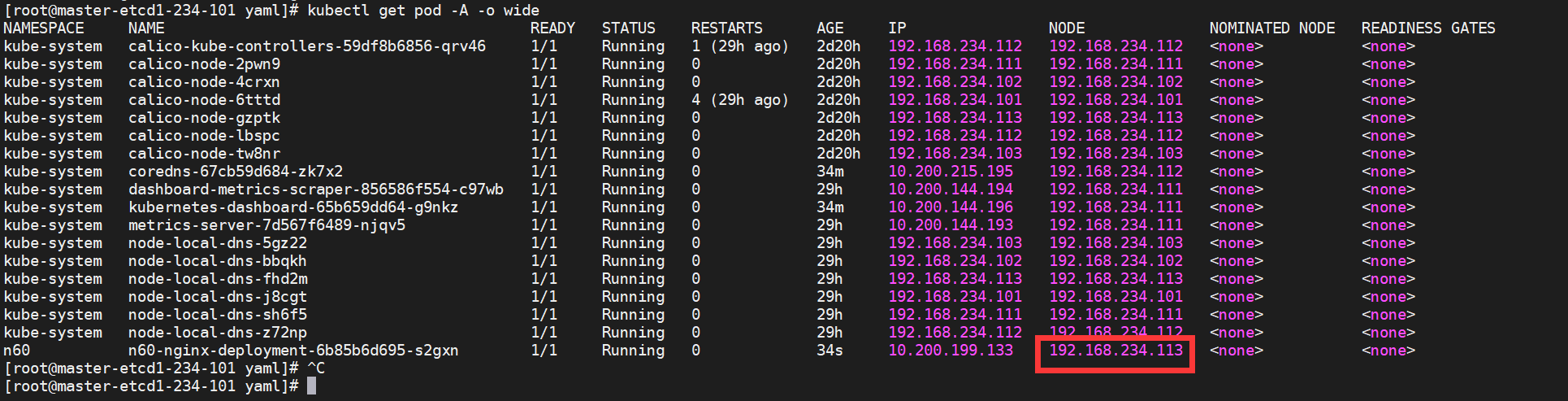

After the drain, only the DaemonSet-managed pods (calico-node and node-local-dns) are still on 192.168.234.113:

```
[root@master-etcd1-234-101 yaml]# kubectl get pod -A -o wide
NAMESPACE     NAME                                         READY   STATUS    RESTARTS      AGE     IP                NODE              NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-59df8b6856-qrv46     1/1     Running   1 (29h ago)   2d20h   192.168.234.112   192.168.234.112   <none>           <none>
kube-system   calico-node-2pwn9                            1/1     Running   0             2d20h   192.168.234.111   192.168.234.111   <none>           <none>
kube-system   calico-node-4crxn                            1/1     Running   0             2d20h   192.168.234.102   192.168.234.102   <none>           <none>
kube-system   calico-node-6tttd                            1/1     Running   4 (29h ago)   2d20h   192.168.234.101   192.168.234.101   <none>           <none>
kube-system   calico-node-gzptk                            1/1     Running   0             2d20h   192.168.234.113   192.168.234.113   <none>           <none>
kube-system   calico-node-lbspc                            1/1     Running   0             2d20h   192.168.234.112   192.168.234.112   <none>           <none>
kube-system   calico-node-tw8nr                            1/1     Running   0             2d20h   192.168.234.103   192.168.234.103   <none>           <none>
kube-system   coredns-67cb59d684-zk7x2                     1/1     Running   0             31m     10.200.215.195    192.168.234.112   <none>           <none>
kube-system   dashboard-metrics-scraper-856586f554-c97wb   1/1     Running   0             29h     10.200.144.194    192.168.234.111   <none>           <none>
kube-system   kubernetes-dashboard-65b659dd64-g9nkz        1/1     Running   0             31m     10.200.144.196    192.168.234.111   <none>           <none>
kube-system   metrics-server-7d567f6489-njqv5              1/1     Running   0             29h     10.200.144.193    192.168.234.111   <none>           <none>
kube-system   node-local-dns-5gz22                         1/1     Running   0             29h     192.168.234.103   192.168.234.103   <none>           <none>
kube-system   node-local-dns-bbqkh                         1/1     Running   0             29h     192.168.234.102   192.168.234.102   <none>           <none>
kube-system   node-local-dns-fhd2m                         1/1     Running   0             29h     192.168.234.113   192.168.234.113   <none>           <none>
kube-system   node-local-dns-j8cgt                         1/1     Running   0             29h     192.168.234.101   192.168.234.101   <none>           <none>
kube-system   node-local-dns-sh6f5                         1/1     Running   0             29h     192.168.234.111   192.168.234.111   <none>           <none>
kube-system   node-local-dns-z72np                         1/1     Running   0             29h     192.168.234.112   192.168.234.112   <none>           <none>
n60           n60-nginx-deployment-6b85b6d695-6gjrf        1/1     Running   0             38m     10.200.215.193    192.168.234.112   <none>           <none>
n60           n60-nginx-deployment-6b85b6d695-g97bt        1/1     Running   0             31m     10.200.215.194    192.168.234.112   <none>           <none>
n60           n60-nginx-deployment-6b85b6d695-nclps        1/1     Running   0             37m     10.200.144.195    192.168.234.111   <none>           <none>
[root@master-etcd1-234-101 yaml]# kubectl get pod -A -o wide |grep 113
kube-system   calico-node-gzptk                            1/1     Running   0             2d20h   192.168.234.113   192.168.234.113   <none>           <none>
kube-system   node-local-dns-fhd2m                         1/1     Running   0             29h     192.168.234.113   192.168.234.113   <none>           <none>
[root@master-etcd1-234-101 yaml]#
```
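`grep 113` matches any line that contains "113" anywhere, for example a pod IP such as 10.200.113.x or a pod name, not only the NODE column. A minimal sketch of a stricter filter, assuming a POSIX shell with awk; pods_on_node is a hypothetical helper name. It reads NODE as the third-from-last field, because RESTARTS values like "1 (29h ago)" contain spaces and shift the left-hand field numbers, while the last two columns (NOMINATED NODE, READINESS GATES) are single tokens such as <none>.

```shell
#!/bin/sh
# Hypothetical helper: print namespace/name of every pod on a given node,
# reading `kubectl get pod -A -o wide` output on stdin.
# NODE is taken as $(NF-2): counting from the right avoids the extra fields
# that a RESTARTS value such as "1 (29h ago)" adds on the left.
pods_on_node() {
  awk -v node="$1" 'NR > 1 && $(NF-2) == node { print $1 "/" $2 }'
}

# Usage against a live cluster:
#   kubectl get pod -A -o wide | pods_on_node 192.168.234.113
```

Alternatively, `kubectl get pod -A -o wide --field-selector spec.nodeName=192.168.234.113` lets the API server do the filtering, with no text parsing at all.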

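After uncordon, the node accepts pods again. Pods that were evicted earlier are not moved back on their own; only pods scheduled after the uncordon can land on it.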

```
[root@master-etcd1-234-101 yaml]# kubectl uncordon 192.168.234.113
node/192.168.234.113 uncordoned
[root@master-etcd1-234-101 yaml]# kubectl get pod -A -o wide
NAMESPACE   NAME                                    READY   STATUS    RESTARTS   AGE   IP               NODE              NOMINATED NODE   READINESS GATES
n60         n60-nginx-deployment-6b85b6d695-98jg5   1/1     Running   0          26s   10.200.199.132   192.168.234.113   <none>           <none>
n60         n60-nginx-deployment-6b85b6d695-m72bb   1/1     Running   0          26s   10.200.199.134   192.168.234.113   <none>           <none>
n60         n60-nginx-deployment-6b85b6d695-s2gxn   1/1     Running   0          26s   10.200.199.133   192.168.234.113   <none>           <none>
[root@master-etcd1-234-101 yaml]# kubectl apply -f nginx.yaml
deployment.apps/n60-nginx-deployment configured
service/n60-nginx-service unchanged
[root@master-etcd1-234-101 yaml]# kubectl get pod -A -o wide
NAMESPACE   NAME                                    READY   STATUS    RESTARTS   AGE   IP               NODE              NOMINATED NODE   READINESS GATES
n60         n60-nginx-deployment-6b85b6d695-s2gxn   1/1     Running   0          34s   10.200.199.133   192.168.234.113   <none>           <none>
[root@master-etcd1-234-101 yaml]#
```
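Because uncordon only re-enables scheduling, the nginx replica that drain pushed to 192.168.234.112 stays there until it is replaced. One option, assuming the workload tolerates a rolling restart, is `kubectl rollout restart`, which creates fresh pods and lets the scheduler consider the uncordoned node again; where the new pods land is still the scheduler's decision. A minimal sketch in a POSIX shell; uncordon_and_restart is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: re-enable scheduling on a node, then restart a
# Deployment so that new replicas can be placed there. Placement is up to
# the scheduler and is not guaranteed to use the uncordoned node.
# The restart only runs if the uncordon succeeded.
uncordon_and_restart() {
  node="$1"
  ns="$2"
  deploy="$3"
  kubectl uncordon "$node" &&
    kubectl -n "$ns" rollout restart "deployment/$deploy"
}

# Usage, with the names from this walkthrough:
#   uncordon_and_restart 192.168.234.113 n60 n60-nginx-deployment
```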